Learning Ambidextrous Robot Grasping Policies

INTRODUCTION

Universal picking (UP), or the ability of robots to rapidly and reliably grasp a wide range of novel objects, can benefit applications in warehousing, manufacturing, medicine, retail, and service robots. UP is highly challenging because of inherent limitations in robot perception and control. Sensor noise and occlusions obscure the exact geometry and position of objects in the environment. Parameters governing physics such as center of mass and friction cannot be observed directly. Imprecise actuation and calibration lead to inaccuracies in arm positioning. Thus, a policy for UP cannot assume precise knowledge of the state of the robot or objects in the environment.

One approach to UP is to create a database of grasps on three-dimensional (3D) object models using grasp performance metrics derived from geometry and physics (1, 2) with stochastic sampling to model uncertainty (3, 4). This analytic method requires a perception system to register sensor data to known objects and does not generalize well to a large variety of novel objects in practice (5, 6). A second approach uses machine learning to train function approximators such as deep neural networks to predict the probability of success of candidate grasps from images using large training datasets of empirical successes and failures. Training datasets are collected from humans (7–9) or physical experiments (10–12). Collecting such data may be tedious and prone to inaccuracies due to changes in calibration or hardware (13).

To reduce the cost of data collection, we explored a hybrid approach that uses models from geometry and mechanics to generate synthetic training datasets. However, policies trained on synthetic data may have reduced performance on a physical robot due to inherent differences between models and real-world systems. This simulation-to-reality transfer problem is a long-standing challenge in robot learning (14–17). To bridge the gap, the hybrid method uses domain randomization (17–22) over objects, sensors, and physical parameters. This encourages policies to learn grasps that are robust to imprecision in sensing, control, and physics. Furthermore, the method plans grasps based on depth images, which can be simulated accurately using ray tracing (18, 19, 23) and are invariant to object color (24).

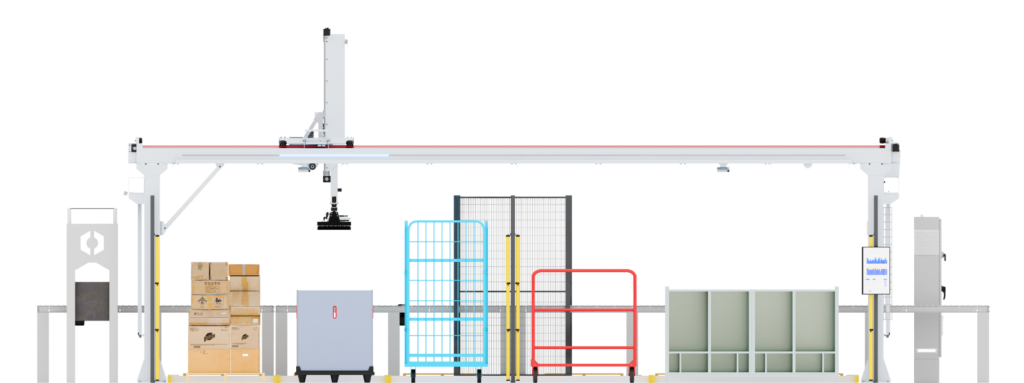

The hybrid approach has been used to learn reliable UP policies on a physical robot with a single gripper (25–28). However, different grasp modalities are needed to reliably handle a wide range of objects in practice. For example, vacuum-based suction-cup grippers can easily grasp objects with nonporous, planar surfaces such as boxes, but they may not be able to grasp small objects, such as paper clips, or porous objects, such as cloth.

In applications such as the Amazon Robotics Challenge, it is common to expand the range of graspable objects by equipping robots with more than one end effector (e.g., both a parallel-jaw gripper and a suction cup). Domain experts typically hand-code a policy to decide which gripper to use at runtime (29–32). These hand-coded strategies are difficult to tune and may be hard to extend to new cameras, grippers, and robots.

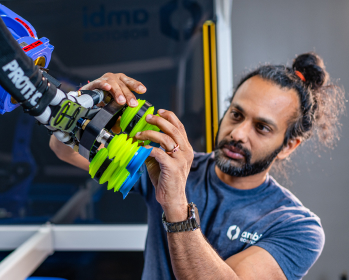

Here, we introduce “ambidextrous” robot policy learning using the hybrid approach to UP. We propose the Dexterity Network (Dex-Net) 4.0 dataset generation model, extending the gripper-specific models of Dex-Net 2.0 (19) and Dex-Net 3.0 (19). The framework evaluates all grasps with a common metric: expected wrench resistance, or the ability to resist task-specific forces and torques, such as gravity, under random perturbations.

We implement the model for a parallel-jaw gripper and a vacuum-based suction cup gripper and generate the Dex-Net 4.0 training dataset containing more than 5 million grasps associated with synthetic point clouds and grasp metrics computed from 1664 unique 3D objects in simulated heaps. We train separate Grasp Quality Convolutional Neural Networks (GQ-CNNs) for each gripper and combine them to plan grasps for objects in a given point cloud.

The contributions of this paper are as follows:

1) A partially observable Markov decision process (POMDP) framework for ambidextrous robot grasping based on robust wrench resistance as a common reward function.

2) An ambidextrous grasping policy trained on the Dex-Net 4.0 dataset that plans a grasp to maximize quality using a separate GQ-CNN for each gripper.

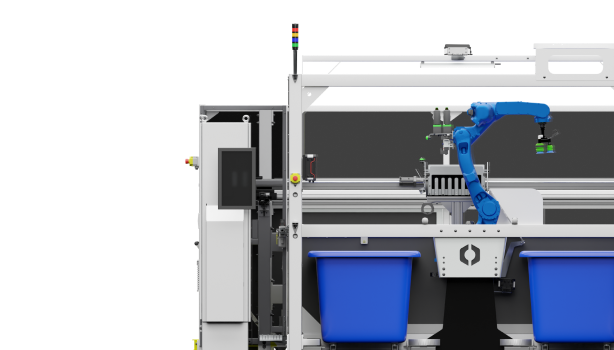

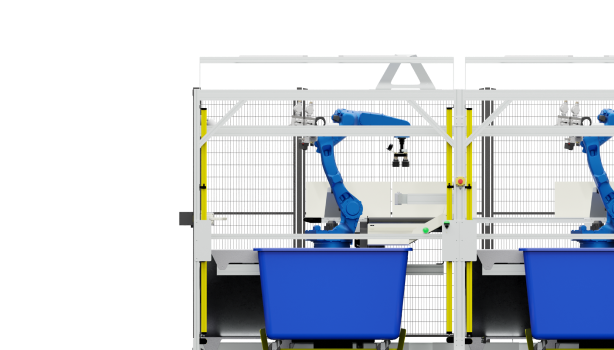

3) Experiments evaluating performance on bin picking with heaps of up to 50 diverse, novel objects and an ABB YuMi robot with a parallel-jaw and suction-cup gripper in comparison with hand-coded and learned baselines.

Experiments suggest that the Dex-Net 4.0 policy achieves 95% reliability on a physical robot at 300 mean picks per hour (MPPH), measured as successful grasps per hour.

RESULTS

Ambidextrous robot grasping

We consider the problem of ambidextrous grasping of a wide range of novel objects from cluttered heaps using a robot with a depth camera and two or more available grippers, such as a vacuum-based suction-cup gripper and/or a parallel-jaw gripper. To provide context for the metrics and approaches considered in experiments, we formalize this problem as a POMDP (33) in which a robot plans grasps to maximize expected reward (probability of grasp success) given imperfect observations of the environment.

A robot with an overhead depth camera views a heap of novel objects in a bin. On grasp attempt $t$, the robot observes a point cloud $y_t$ from the depth camera. The robot uses a policy $u_t = \pi(y_t)$ to plan a grasp action $u_t$ for a gripper $g$ consisting of a 3D rigid position and orientation of the gripper $T_g = (R_g, t_g) \in SE(3)$. Upon executing $u_t$, the robot receives a reward $R_t = 1$ if it successfully lifts and transports exactly one object from the bin to a receptacle and $R_t = 0$ otherwise. The observations and rewards depend on a latent state $x_t$ that is unknown to the robot and describes geometry, pose, center of mass, and material properties of each object. The process terminates once the bin is empty or after $T$ total grasp attempts.

These variables evolve according to an environment distribution that reflects sensor noise, control imprecision, and variation in the initial bin state:

1) Initial state distribution. Let $p(x_0)$ be a distribution over possible states of the environment that the robot is expected to handle due to variation in objects and tolerances in camera positioning.

2) Observation distribution. Let $p(y_t \mid x_t)$ be a distribution over observations given a state due to sensor noise and tolerances in the camera optical parameters.

3) Transition distribution. Let $p(x_{t+1} \mid x_t, u_t)$ be a distribution over next states given the current state and grasp action due to imprecision in control and physics.
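The interaction loop defined by these distributions can be sketched in a few lines of Python. This is a toy illustration only, not the simulator used in this work: the `ToyBin` state, the noisy object count standing in for a point cloud, and the per-gripper success probabilities are all hypothetical, and the initial state is fixed rather than sampled for brevity.

```python
import random
from dataclasses import dataclass


@dataclass
class ToyBin:
    """Hypothetical latent state x_t: only the number of objects left in the bin."""
    num_objects: int


def observe(state, rng):
    """Sample y_t ~ p(y_t | x_t): a noisy object count standing in for a point cloud."""
    return max(0, state.num_objects + rng.choice([-1, 0, 1]))


def step(state, action, rng):
    """Sample the reward R_t and the next state x_{t+1} ~ p(x_{t+1} | x_t, u_t)."""
    success_prob = {"suction": 0.9, "jaw": 0.8}[action]  # made-up numbers
    reward = 1 if rng.random() < success_prob else 0
    return reward, ToyBin(state.num_objects - reward)


def run_episode(policy, num_objects, max_attempts, seed=0):
    """Roll out a policy until the bin is empty or T = max_attempts grasps were tried."""
    rng = random.Random(seed)
    state = ToyBin(num_objects)
    rewards = []
    for _ in range(max_attempts):
        if state.num_objects == 0:
            break
        y = observe(state, rng)        # the policy only sees the observation
        u = policy(y)                  # u_t = pi(y_t)
        r, state = step(state, u, rng)
        rewards.append(r)
    return rewards


rewards = run_episode(lambda y: "suction", num_objects=5, max_attempts=20)
```

The policy never touches `state` directly, which is the partial observability that the POMDP formulation is meant to capture.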

The goal is to learn a policy $\pi$ to maximize the rate of reward, or MPPH $\rho$, up to a maximum of $T$ grasp attempts:

$$\pi^* = \arg\max_{\pi} \rho(\pi) = \mathbb{E}\left[ \frac{\sum_{t=0}^{T} R_t}{\sum_{t=0}^{T} \Delta_t} \right]$$

where $T$ is the number of grasp attempts and $\Delta_t$ is the duration of executing grasp action $u_t$ in hours. The expectation is taken with respect to the environment distribution.

It is common to measure performance in terms of the mean rate v and reliability Φ (also known as the success rate) of a grasping policy for a given range of objects.

If the time per grasp is constant, the MPPH is the product of rate and reliability: ρ = vΦ.
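As a quick numerical check of this relationship, the sketch below computes empirical versions of the three quantities from a log of grasp attempts. The function name `grasp_metrics` and the numbers are illustrative assumptions, and the sample averages stand in for the expectations in the definitions.

```python
def grasp_metrics(rewards, durations_hours):
    """Return (rate, reliability, mpph) for one logged run of grasp attempts."""
    if len(rewards) != len(durations_hours) or not rewards:
        raise ValueError("need one duration per grasp attempt")
    total_time = sum(durations_hours)
    rate = len(rewards) / total_time            # grasp attempts per hour
    reliability = sum(rewards) / len(rewards)   # fraction of successful attempts
    mpph = sum(rewards) / total_time            # successful picks per hour
    return rate, reliability, mpph


# Illustrative log: 10 attempts of 10 seconds each, 9 of which succeed.
rewards = [1] * 9 + [0]
durations = [10 / 3600] * 10
rate, reliability, mpph = grasp_metrics(rewards, durations)
```

With these numbers the rate is 360 attempts per hour and the reliability is 0.9, so the MPPH is about 324, matching the product of the two.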

Learning from synthetic data

We propose a hybrid approach to ambidextrous grasping that learns policies on synthetic training datasets generated using analytic models and domain randomization over a diverse range of objects, cameras, and parameters of physics for robust transfer from simulation to reality (17, 20, 22). The method optimizes for a policy to maximize MPPH under the assumption of a constant time per grasp.

To learn a policy, the method uses a training dataset generation distribution based on models from physics and geometry, $\mu$, to computationally synthesize a massive training dataset of point clouds, grasps, and reward labels for heterogeneous grippers. The distribution $\mu$ consists of two stochastic components: (i) a synthetic training environment $\xi(y_0, R_0, \ldots, y_T, R_T \mid \pi)$ that can sample paired observations and rewards given a policy and (ii) a data collection policy $\tau(u_t \mid x_t, y_t)$ that can sample a diverse set of grasps using full-state knowledge. The synthetic training environment simulates grasp outcomes by evaluating rewards according to the ability of a grasp to resist forces and torques due to gravity and random perturbations. The environment also stochastically samples heaps of 3D objects in a bin and renders depth images of the scene using domain randomization over the camera position, focal length, and optical center pixel. The dataset collection policy evaluates actions in the synthetic training environment using algorithmic supervision to guide learning toward successful grasps.
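To make the domain randomization step concrete, the following sketch draws randomized scene parameters of the kind described above (camera position, focal length, optical center, a physics parameter, and heap size). The `SceneParams` fields and every numeric range are assumptions chosen for illustration; they are not the values used to generate the Dex-Net 4.0 dataset, and rendering and grasp labeling are omitted.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    camera_height_m: float    # camera position above the bin
    focal_length_px: float
    optical_center_px: tuple  # (cx, cy)
    friction: float           # physics parameter
    num_objects: int          # size of the sampled heap


def sample_scene(rng):
    """Draw one randomized scene. All ranges are illustrative, not from the paper."""
    return SceneParams(
        camera_height_m=rng.uniform(0.5, 0.7),
        focal_length_px=rng.uniform(520.0, 530.0),
        optical_center_px=(rng.uniform(315.0, 325.0), rng.uniform(235.0, 245.0)),
        friction=rng.uniform(0.4, 0.8),
        num_objects=rng.randint(1, 10),
    )


def sample_scenes(num_scenes, seed=0):
    """Sample a reproducible list of randomized scenes."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(num_scenes)]


scenes = sample_scenes(1000)
```

In a full pipeline, each sampled scene would be rendered to a depth image and paired with grasps and reward labels; a policy trained across many such draws cannot rely on any single camera or friction setting.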

We explore large-scale supervised learning on samples from $\mu$ to train the ambidextrous policy $\pi_\theta$ across a set of two or more available grippers $G$, as illustrated in Fig. 1. First, we sample a massive training dataset from a software implementation of $\mu$. Then, we learn a GQ-CNN $Q_{\theta,g}(y, u) \in [0, 1]$ to estimate the probability of success for a grasp with gripper $g$ given a depth image. Specifically, we optimize the weights $\theta_g$ to minimize the cross entropy loss $\mathcal{L}$ between the GQ-CNN prediction and the true reward over the dataset:

$$\theta_g^* = \arg\min_{\theta_g} \sum_{(R_i, u_i, y_i) \in D_g} \mathcal{L}\left(R_i, Q_{\theta,g}(y_i, u_i)\right)$$

where $D_g$ denotes the subset of the training dataset $D$ containing only grasps for gripper $g$. We construct a robot policy $\pi_\theta$ from the GQ-CNNs by planning the grasp that maximizes quality across all available grippers:

$$\pi_\theta(y_t) = \arg\max_{g \in G,\; u_g \in U_g} Q_{\theta,g}(y_t, u_g)$$

where $U_g$ is a set of candidate grasps for gripper $g$ sampled from the depth image.
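The two ingredients of this paragraph, a cross entropy loss on 0/1 reward labels and a policy that takes the best-scoring grasp over all grippers, can be sketched as follows. This is a minimal illustration under stated assumptions: `cross_entropy` and `plan_grasp` are hypothetical names, grasps are represented by plain integers, and the lambda quality functions are stand-ins for trained GQ-CNNs.

```python
import math


def cross_entropy(reward, q, eps=1e-12):
    """Binary cross entropy between a 0/1 reward and a predicted success probability."""
    q = min(max(q, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(reward * math.log(q) + (1 - reward) * math.log(1.0 - q))


def plan_grasp(observation, quality_fns, candidates):
    """Return (gripper, grasp, quality) with the highest predicted quality.

    quality_fns: dict mapping gripper -> callable(observation, grasp) -> value in [0, 1]
    candidates:  dict mapping gripper -> list of candidate grasps for that gripper
    """
    best = None
    for gripper, q_fn in quality_fns.items():
        for grasp in candidates.get(gripper, []):
            q = q_fn(observation, grasp)
            if best is None or q > best[2]:
                best = (gripper, grasp, q)
    if best is None:
        raise ValueError("no candidate grasps")
    return best


# Stand-in quality functions; a real GQ-CNN would score a depth image and a grasp.
quality_fns = {
    "suction": lambda y, u: 0.6 + 0.1 * u,
    "jaw": lambda y, u: 0.9 - 0.2 * u,
}
candidates = {"suction": [0, 1, 2], "jaw": [0, 1]}
best = plan_grasp(None, quality_fns, candidates)
```

Because every gripper's network outputs a probability of success on the same scale, the maximization can compare suction and parallel-jaw candidates directly; here the parallel-jaw grasp `0` wins with a predicted quality of 0.9.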